Background

Many governments support scientific research with public funds, either because science in and of itself is considered a public good (Stiglitz, 1999) or out of a desire to produce actionable science that can be integrated into policy and practice by government representatives, governmental agencies, news outlets, communities, and other interested organizations and individuals (Smits and Denis, 2014; Wyborn et al., 2019). Generally, there is strong support for the use of public funds for actionable research (Kennedy, 2018; Yin et al., 2022), but the breadth and depth of potential user groups raise questions regarding how scientific projects are chosen for funding, which individuals or organizations set research priorities, and whether those priorities are focused on specific local issues or are broader and more centralized.

Funding agencies can perform important priority-setting roles, either in response to internal agency directives or political influences, and/or based on relationships with scientists who work in the field (Potì and Reale, 2007). Depending on the specific call for research proposals, the topics explored in research projects may be identified by any combination of the team conducting the work, the funding organization’s program managers, potential users of the generated information, policymakers, or other interested parties. Each of these models has strengths and weaknesses, but there is a possibility that decision-making and priority setting, if too centralized and top down, may be too rigid to spur breakthrough research in important areas (Azoulay et al., 2019).

The National Sea Grant College Program (“Sea Grant”) addresses this concern with a model for adaptively funding research to address local priorities. Sea Grant is a NOAA-funded network of 34 university-based programs in every coastal and Great Lakes state of the continental United States, plus the US territories of Guam and Puerto Rico. Sea Grant’s funding comes from NOAA Oceanic and Atmospheric Research (OAR or “NOAA Research”) and approximately 95% of Sea Grant’s funding is distributed to the state Sea Grant programs. Sea Grant programs are supported through partnerships involving states, universities, and locally targeted federal funding. Since the founding of the Sea Grant program in 1966, 10% to 80% of each state program’s annual funding has supported competitive research, with a current target of 30% to 50% of a program’s core funds dedicated to research (Pennock, 2022). In addition, staff at individual Sea Grant programs may perform research as part of their job duties.

Sea Grant funding is intentionally flexible: though research proposals to Sea Grant programs must undergo a rigorous, formal peer review process following the guidelines in the National Sea Grant Competition Policy (National Sea Grant College Program, 2022), individual programs have latitude to identify locally relevant topical research priorities. This latitude is unusual for a federal funding agency. Topical priorities for a given competition are typically shaped with input from program advisory committees, program staff, and, at times, the US Congress.

Historically, national research priorities have included aquatic invasive species management, aquaculture, and aquatic organisms such as highly migratory species of fishes or American lobster (Homarus americanus). More recently, there has been a particular focus on research to improve resiliency (e.g., in response to coastal hazards) and a general movement toward incorporating social science and encouraging interdisciplinary teams. These national research priorities trickle down to local Sea Grant programs; however, Sea Grant programs may (and typically do) supplement or supplant these with locally relevant priorities. Beyond the quality of the ideas proposed and the qualifications of the researchers involved, successful proposals in each competition often have well-developed local partnerships, identify clear end users of the information that will be generated, and include co-development of research proposals with these end users.

There is potential tension between producing work that is scientifically impactful and producing work that is a useful public good, and there is a need to study the effects of funding important locally driven work on scientific output. Others have examined the impacts of different funding models on research outputs and individual researchers (e.g., Smits and Denis, 2014; Bloch and Schneider, 2016; Heyard and Hottenrott, 2021), focusing on funding at the scale of either entire countries or very large funding agencies within a country. In this manuscript, we use Sea Grant research as a case study in targeting smaller allocations of research funds toward locally defined issues. Our analysis is guided by the following research questions:

- How has Sea Grant-funded research contributed to the broader scientific literature in terms of bibliometrics and impact?

- How does Sea Grant-funded research compare to other NOAA research in terms of bibliometrics, impact, and cost effectiveness?

- To what research topics have Sea Grant-funded publications contributed?

Methods

For our case study, we assembled a database of Sea Grant-funded research publications for bibliometric and topic analysis. We defined Sea Grant-funded research publications as peer-reviewed publications that were either written by Sea Grant-affiliated researchers or funded at least in part by Sea Grant programs. To find Sea Grant research publications, we searched Clarivate Web of Science for “Sea Grant” in either the “author address” or “funding agency” fields. We performed the search on August 22, 2023. This search yields an incomplete record of Sea Grant research for several reasons: Web of Science funding source information is inconsistent in publications prior to 2008, authors may have failed to list Sea Grant as a funding source, and there is a lack of backup information from Sea Grant programs (which often are not notified by previously funded project PIs about publications). However, based on conversations with Sea Grant programs and National Sea Grant Office staff, we are confident that our search results include a large portion of the overall population of Sea Grant research publications since 2008 and as many articles as feasible from prior years. We also note that Sea Grant is often one of several funding agencies acknowledged in the set of publications found through our search.

For each Sea Grant research publication, we downloaded complete records from Web of Science and retained the following fields for analysis:

- Title

- Author(s)

- Author affiliation(s)

- Journal title

- Publication year

- Categories, which includes Web of Science-generated categories for the journal that the publication appeared in. Articles may have more than one category.

- Keywords as supplied by the author(s).

- Total citations

- Abstract, which includes the full text of the abstract.

For bibliometric and citation analyses, we calculated total, mean, and median citations per article, and cumulative h-index (the largest number h such that h papers each received h or more citations) and g-index (the largest number g such that the g most-cited papers together received at least g² citations, following Egghe, 2006) for the corpus. We also calculated the i10-index, Google Scholar’s basic index of the number of publications with 10 or more citations.
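The three indices described above can be illustrated with a minimal Python sketch. This is not the authors' code (their analysis was carried out in R); the function names and the example citation counts are hypothetical and chosen only to make the definitions concrete. The g-index here is capped at the number of papers in the list, which is one common convention.

```python
from typing import Sequence


def h_index(citations: Sequence[int]) -> int:
    """Largest h such that h papers each have at least h citations."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h


def g_index(citations: Sequence[int]) -> int:
    """Largest g such that the g most-cited papers total at least g**2 citations.

    Assumption: g is capped at the number of papers supplied.
    """
    ranked = sorted(citations, reverse=True)
    g = 0
    running_total = 0
    for rank, cites in enumerate(ranked, start=1):
        running_total += cites
        if running_total >= rank * rank:
            g = rank
    return g


def i10_index(citations: Sequence[int]) -> int:
    """Number of papers with 10 or more citations."""
    return sum(1 for cites in citations if cites >= 10)


# Hypothetical citation counts for a seven-paper corpus.
example = [10, 6, 5, 3, 1, 0, 0]
print(h_index(example), g_index(example), i10_index(example))
```

For the hypothetical list above, three papers have at least three citations each (h = 3), the five most-cited papers total 25 = 5² citations (g = 5), and one paper has 10 or more citations (i10 = 1).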

We characterized research topics in two ways. First, we analyzed abstract text, which was available consistently starting in 1991. For this analysis, we split each abstract into a series of individual words and two-word phrases (“bigrams”), following Silge and Robinson (2017). To prevent the abstract analysis from being dominated by irrelevant information, we removed common words associated with the publication process (e.g., “copyright” or “Elsevier”), and ~1,150 common “stop words,” which include common verbs, adverbs, adjectives, pronouns, articles, and other common words that are not useful for distinguishing text but occur frequently (Silge and Robinson, 2017). Second, we analyzed author-supplied keywords, which were available consistently starting in 2007 and sporadically prior to that. Note that “keywords” are often phrases (e.g., “climate change”) and that articles could have one or more keywords associated with them. We considered each keyword (or phrase) separately for our analysis. In both cases, we used the R tidytext package to perform the analysis (Silge and Robinson, 2016). We also performed data cleaning and analysis using R version 4.3.1 (R Core Team, 2023) and the ggplot2 (Wickham, 2016), dplyr (Wickham et al., 2021), viridis (Garnier et al., 2023), here (Müller, 2020), and jscTools (developed by author Carlton in 2020) packages.
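The bigram step can be sketched in a few lines of Python. This is an illustration only: the authors used the R tidytext package, and the tokenizer, the tiny stop list, and the example abstracts below are all assumptions made for the sketch. It forms every adjacent word pair and then discards pairs in which either word is a stop word, which is one common way to filter bigrams.

```python
import re
from collections import Counter
from typing import Iterable, List, Set, Tuple

# Tiny illustrative stop list (assumption); the study removed roughly
# 1,150 stop words plus publishing terms such as "copyright" and "Elsevier".
STOP_WORDS: Set[str] = {
    "the", "of", "in", "and", "a", "is", "to", "for", "on", "with",
    "copyright", "elsevier",
}


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def bigrams(text: str, stop_words: Set[str] = STOP_WORDS) -> List[Tuple[str, str]]:
    """Return adjacent word pairs in which neither word is a stop word."""
    words = tokenize(text)
    return [
        (first, second)
        for first, second in zip(words, words[1:])
        if first not in stop_words and second not in stop_words
    ]


def count_bigrams(abstracts: Iterable[str]) -> Counter:
    """Tally bigrams across a collection of abstracts."""
    counts: Counter = Counter()
    for abstract in abstracts:
        counts.update(bigrams(abstract))
    return counts


# Hypothetical abstracts, not drawn from the study's corpus.
sample = [
    "Water quality in the Great Lakes is declining.",
    "Great Lakes water quality and stock assessment.",
]
for pair, n in count_bigrams(sample).most_common(2):
    print(" ".join(pair), n)
```

In this toy corpus, "water quality" and "great lakes" each appear twice, while pairs such as "quality in" are dropped because they contain a stop word.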

A full topic analysis of broader NOAA research publications is beyond the scope of this article, but to compare research productivity between Sea Grant funding and general NOAA research funding, we repeated the Web of Science search on December 11, 2023, using the search terms “NOAA” or “National Oceanic and Atmospheric Administration” in either the “author address” or “funding agency” fields. We restricted this search to the years 2016–2023, which are the years that NOAA supplied research funding totals in their congressional justifications (available at https://www.noaa.gov/organization/budget-finance-performance/budget-and-reports). To analyze productivity and funding levels, we compared NOAA research budgets to Sea Grant appropriations from 2016 to 2023.

Findings

Publication Metrics

The sample contained 7,423 Sea Grant publications across 1,187 journals in 164 unique Web of Science categories published between 1973 and 2023. The top five most common journals were Marine Ecology Progress Series (231 articles), Journal of Great Lakes Research (179), PLOS ONE (166), Estuaries and Coasts (162), and Canadian Journal of Fisheries and Aquatic Sciences (116).

The Sea Grant sample had 37,960 total authors and 20,197 unique authors. The average number of authors per publication was 5.11 (SD: 12.0) and the median was 4; over 99% of publications had multiple authors. The maximum number of authors for a single publication (Alves da Rosa et al., 2020) was 642. The average number of authors per publication is increasing: the Spearman rank correlation between publication year and number of authors is 0.13 (n = 7,423 articles between 1973 and 2023; p ≤ 0.001).

Of the unique authors, 13,773 had a single Sea Grant research publication and 6,424 had multiple publications. The mean number of publications per author was 1.88 (SD: 2.38) and the median was 1. The most prolific author had 60 publications.

Research Impact

Sea Grant publications were cited 189,898 times (average: 25.6 per article; median: 12 per article). In aggregate, Sea Grant publications have an h-index of 150, a g-index of 239, and an i10-index of 4,173. The online supplementary Table S1 lists the 10 most-cited publications.

Comparing Sea Grant and NOAA Research Funding

Sea Grant publications are a subset of NOAA publications. From 2016 to 2023 (the years for which budget information was available), there were 4,085 Sea Grant publications and 23,280 NOAA publications. In this time frame, Sea Grant publications were cited 63,433 times (average: 15.5 per article; median: 7), had an h-index of 86, an i10-index of 1,674, and a g-index of 144. NOAA publications were cited 512,779 times (average: 22.0 per article; median: 7), had an h-index of 207, an i10-index of 11,570, and a g-index of 343.

According to NOAA congressional budget justifications, Sea Grant’s total funding for the period 2016–2023 was approximately $559,559,000. Assuming Sea Grant programs use an average of 40% of the budget for research (per the Sea Grant research allocation policy; Pennock, 2022), this yields a Sea Grant research budget of $223,823,600 compared to overall NOAA research funding of $3,604,941,000. As a rough efficiency measure, there was one Sea Grant publication per $54,792 in research funding and one citation per $3,529. There was one NOAA publication per $154,851 in NOAA research funding and one citation per $7,030.
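The efficiency arithmetic above can be reproduced with a short Python sketch. All figures are taken from the text; the function and variable names are hypothetical, and the research share is left as a parameter because the 40% allocation is an assumption rather than a measured value.

```python
def research_budget(total_usd: int, research_share_percent: int) -> int:
    """Portion of a total budget assumed to go to research (integer dollars)."""
    return total_usd * research_share_percent // 100


def dollars_per_output(budget_usd: int, n_outputs: int) -> int:
    """Research dollars per publication or citation, rounded to the nearest dollar."""
    return round(budget_usd / n_outputs)


# Figures reported in the text for 2016-2023.
SEA_GRANT_TOTAL_USD = 559_559_000
SEA_GRANT_PUBLICATIONS = 4_085
SEA_GRANT_CITATIONS = 63_433

NOAA_RESEARCH_USD = 3_604_941_000
NOAA_PUBLICATIONS = 23_280
NOAA_CITATIONS = 512_779

# Assumed 40% research allocation for Sea Grant programs.
sea_grant_research_usd = research_budget(SEA_GRANT_TOTAL_USD, 40)

print("Sea Grant research budget:", sea_grant_research_usd)
print("Sea Grant $/publication:",
      dollars_per_output(sea_grant_research_usd, SEA_GRANT_PUBLICATIONS))
print("Sea Grant $/citation:",
      dollars_per_output(sea_grant_research_usd, SEA_GRANT_CITATIONS))
print("NOAA $/publication:",
      dollars_per_output(NOAA_RESEARCH_USD, NOAA_PUBLICATIONS))
print("NOAA $/citation:",
      dollars_per_output(NOAA_RESEARCH_USD, NOAA_CITATIONS))
```

Changing the second argument of `research_budget` shows how sensitive the Sea Grant figures are to the assumed research share: a smaller share lowers the estimated dollars per publication and per citation.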

Topic Analysis

Abstract Analysis

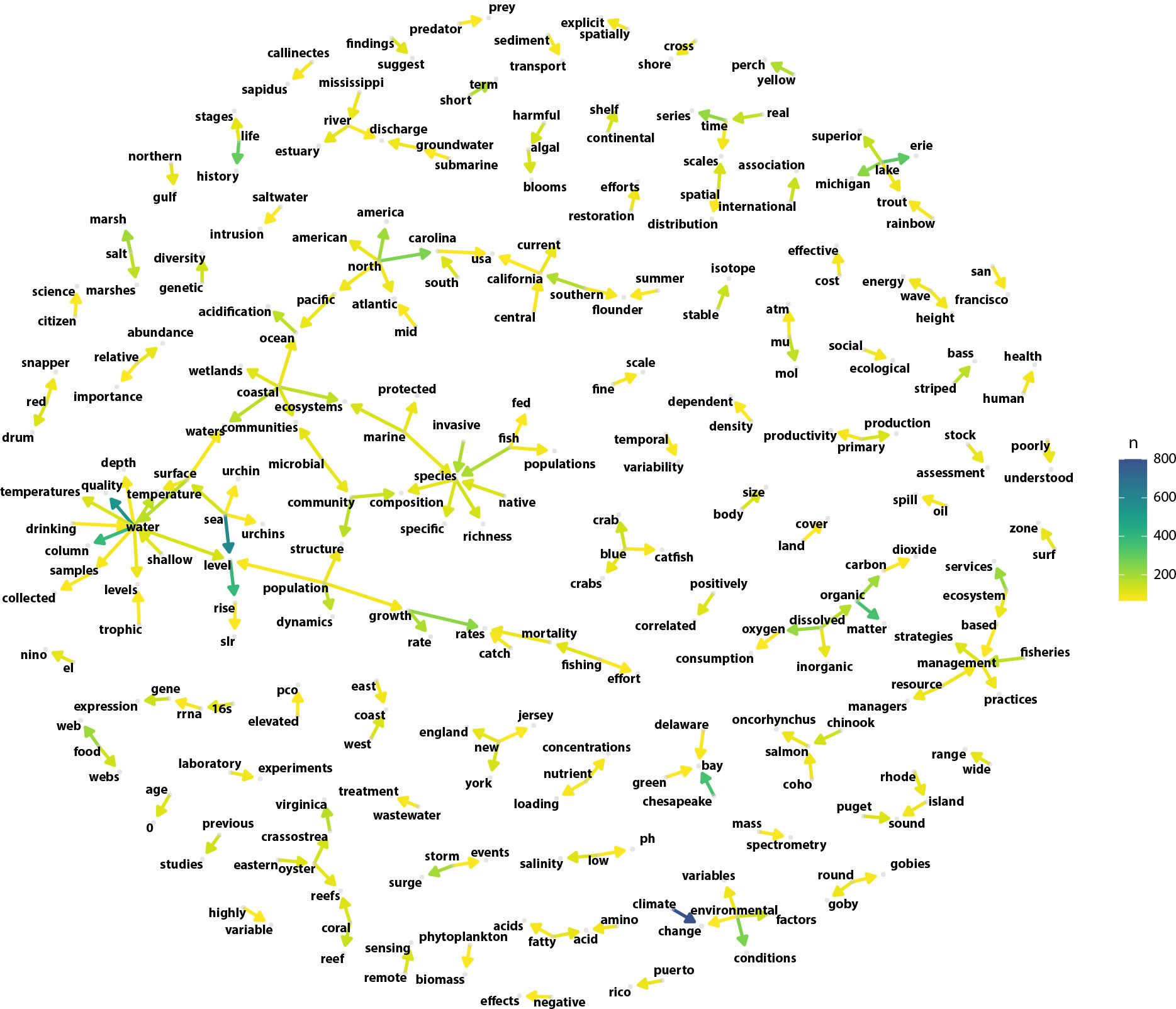

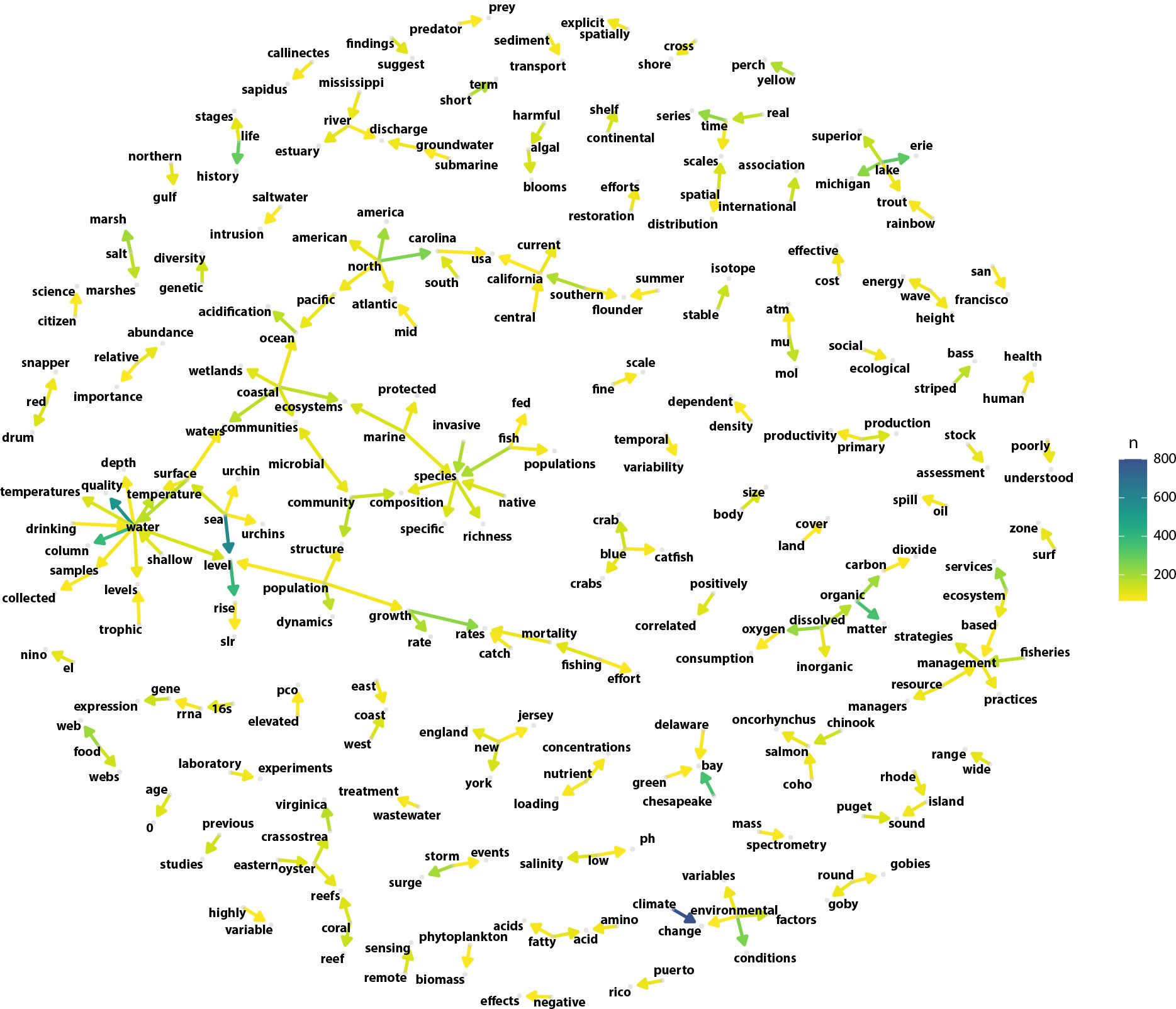

Abstracts appear in articles starting in 1991. The 10 most common bigrams in abstracts between 1991 and 2022 (the last complete year in our sample) are listed in Table S2 (n = 6,871 articles). Bigrams that appear more than 60 times are visualized in Figure 1.

FIGURE 1. Network graph of bigrams that appear in abstracts 60+ times in the 7,165 Sea Grant-funded research publications from 1991 to 2023. Arrow color indicates a greater number of instances of this bigram occurring throughout the data set.

Keyword Analysis

Keywords began appearing consistently in the Web of Science database in 2008. Approximately 72% of the Sea Grant publications had author-supplied keywords; those articles had 15,193 different keywords used a total of 32,855 times. When keywords were listed, the mean number of keywords per article was 5.1 (SD = 3.9); the median was 5. Keywords are more prevalent later in the data set: the average publication year for articles with keywords was 2016, compared to 2012 for articles without keywords.

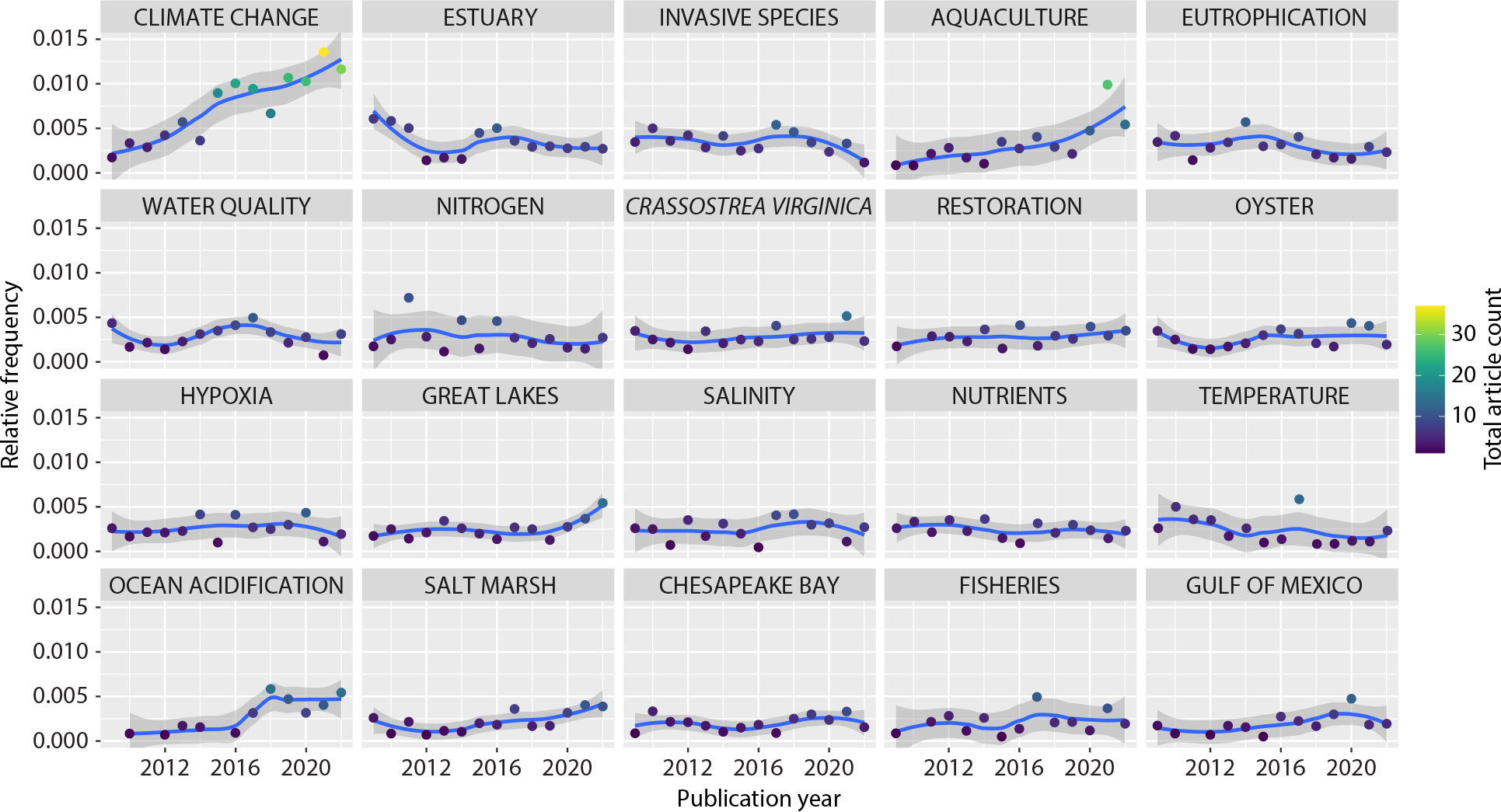

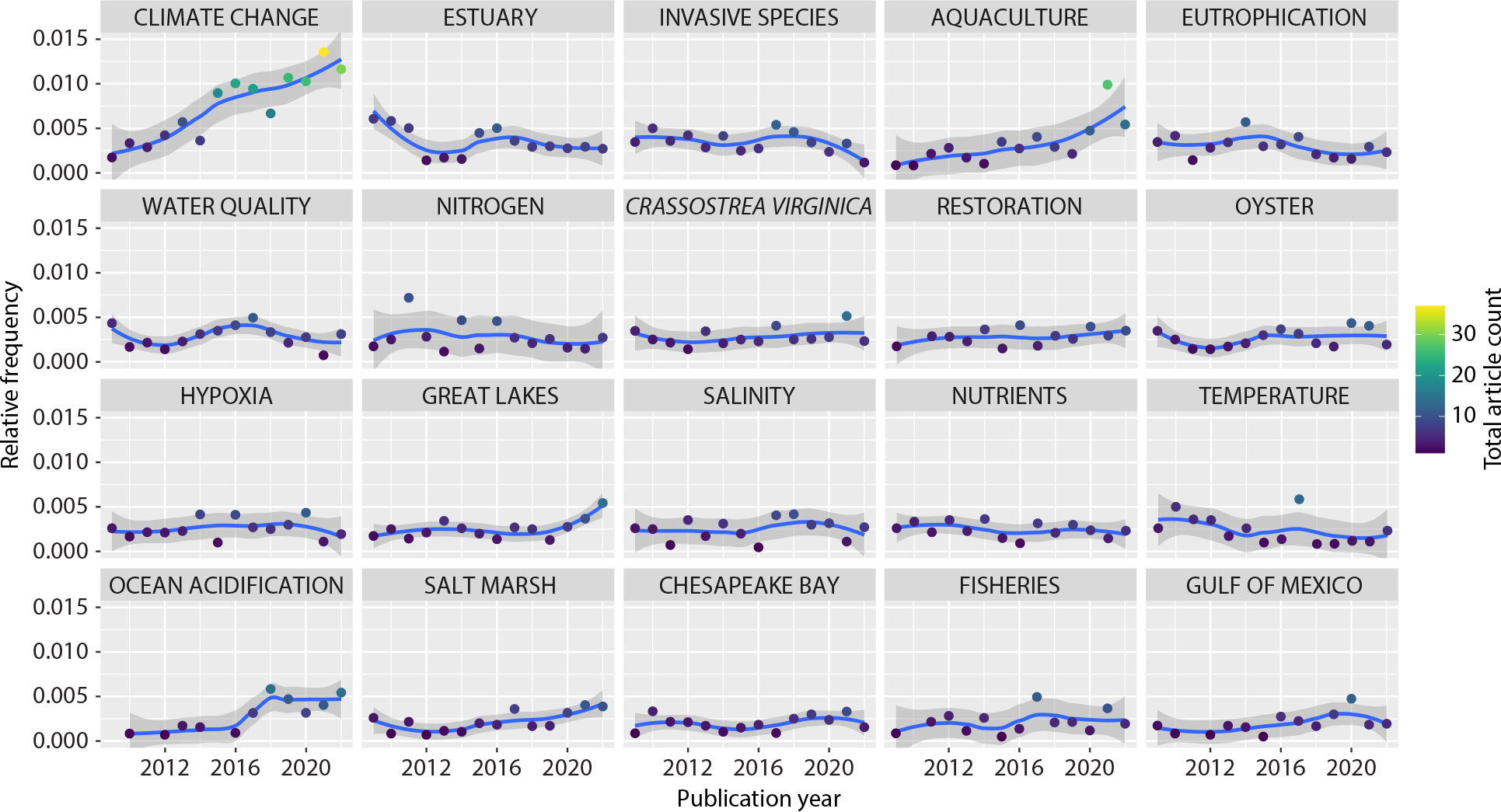

The 20 most common keywords or phrases are listed in Table S3 and their relative prevalence between 2008 and 2022 is visualized in Figure 2.

FIGURE 2. Relative frequency of the top 20 author-supplied keywords from 2008 to 2022. Dots represent individual data points and the line a LOESS regression line with a 95% confidence interval.

Discussion

Sea Grant research has contributed to the scientific literature across a variety of topics and disciplines. The primary contributions have been to coastal, marine, and aquatic sciences, as evidenced by the fact that four of the top five most common journals where Sea Grant work has been published (Marine Ecology Progress Series, Journal of Great Lakes Research, Estuaries and Coasts, and Canadian Journal of Fisheries and Aquatic Sciences) are directly related to those disciplines. However, Sea Grant research also appears in discipline-spanning journals, including high-impact journals such as Science (n = 12 articles), Nature (n = 5), and Proceedings of the National Academy of Sciences of the United States of America (n = 31).

The publications were written by a broad group of authors. While the number of authors per article tracks prior research on science manuscript authorship (e.g., Wuchty et al., 2007), the publications per author is lower than in other studies (between 5 and 7; Newman, 2004). This is not unexpected: Sea Grant-supported work is only a part of most authors’ research portfolios and Sea Grant-funded manuscripts are a subset of most authors’ total publications. In addition, applied research projects, such as many Sea Grant projects, may support students whose careers take them outside of academia and other research-focused enterprises and into positions where peer-reviewed publications are less of a focus of the job.

The increase in coauthors over time reflects a trend of increased coauthorship in the sciences (e.g., Hancean et al., 2021, found that the number of authors per paper among the most productive European researchers increased from 4.2 in 2007 to 6.6 in 2017 and 7.9 in 2018). The tendency for the papers we analyzed to have large groups of coauthors may also stem from Sea Grant-specific factors: 50% cost sharing is often required for Sea Grant research funding, and Sea Grant researchers often use Sea Grant funds to extend other research funds. Additionally, Sea Grant research tends to be applied, which may result in interdisciplinary project teams that spread research funds and authorship credit across multiple team members.

The Sea Grant approach of funding research to address local needs is evident in the keyword data. The top 20 keywords include “Great Lakes” (home to the Minnesota, Wisconsin, Illinois-Indiana, Michigan, Ohio, Pennsylvania, and New York Sea Grant programs), “Chesapeake Bay” (Maryland, Delaware, and Virginia Sea Grant programs), and “Gulf of Mexico” (Texas, Louisiana, Mississippi-Alabama, and Florida Sea Grant programs) in addition to words that reflect issues of local importance across Sea Grant programs (e.g., “oyster” and “Crassostrea virginica,” the scientific name for the Eastern oyster; “fisheries” and “aquaculture,” which have been a focus throughout Sea Grant’s history; “invasive species,” a particular focus of the Great Lakes Sea Grant programs but also important nationally). However, the keyword data suggest at least some of the locally focused Sea Grant research is couched within broader topics, including “climate change,” “estuary” ecology, and “ocean acidification.” That is, while local foci are evident, the research also addresses broad, critical environmental issues of our time.

Although this analysis is limited by the fact that we were only able to obtain 15 years of keyword data, the timing of the prevalence of different keywords reveals how funding foci can influence the scientific literature. Some keywords, such as “Chesapeake Bay,” have been important to Sea Grant programs for decades, and are consistently prevalent throughout the keyword data set (Figure 2). Other keywords may reflect changes in funding focus over time. For example, in recent years, Sea Grant has increased its institutional focus on aquaculture (e.g., through annual special funding initiatives starting in 2018), which likely contributed to the substantial increase in “aquaculture” in the later years of the keyword data set. The keywords also appear to reflect general trends in environmental research, such as an increased focus on “climate change” and “ocean acidification” in the last several decades and an increased focus on restoration work in the “Great Lakes” after the 2010 Great Lakes Restoration Initiative and the 2012 update to the Great Lakes Water Quality Agreement. The importance of key individuals to this analysis is also worth considering. For example, the researcher with the most Sea Grant research publications (60) is a fish ecologist who focuses on the Great Lakes and started working with Sea Grant in 2010, which likely contributed to the prevalence of “Great Lakes” in the keywords. Though we did not explore specific relationships and timing between funding foci, funding decisions, and manuscript publication, it seems evident that funding organizations’ decisions at the onset of any given opportunity influence what science is published.

By examining the network graph of the abstract bigram data, we can refine our understanding of Sea Grant research. The data suggest a number of topics related to traditional Sea Grant focus areas that are also national efforts, such as water availability and quality (e.g., “water quality,” “water temperature,” “drinking water,” “nutrient loading”) and fisheries (e.g., “fisheries management,” “stock assessment,” “marine protected (area)”), and a number of phrases specific to a given technique, analysis, or scientific discipline (e.g., “gene expression,” “low pH,” “μm mol,” “positively correlated”). In keeping with the Sea Grant framework, these phrases suggest that Sea Grant-funded research involves both key issues being addressed by environmental scientists and connection to specific local contexts, as reflected in the presence of location names (e.g., “Narragansett Bay,” “Green Bay,” “Lake Erie,” “Puget Sound,” “Mississippi River”) and individual species names (e.g., “red snapper,” “red drum,” “Callinectes sapidus,” “sea urchin,” and different types of salmon). In addition, the data seem to reflect Sea Grant’s focus on usable science (e.g., “resource managers,” “resource management,” “decision,” “practices,” and “strategies”), and Sea Grant’s ability to be responsive to individual incidents (e.g., “algal bloom,” “oil spill,” or “storm surge”).

While the data do not allow us to make detailed comparisons between Sea Grant and other funding models, the coarse comparison to broader NOAA research funding is instructive. There are several important caveats to these comparisons: our budget estimates are approximate, Sea Grant and NOAA were not the sole funding source for many of the publications in our database, and much of NOAA research funding might go to internal research (e.g., weather research) that might not be shared via peer-reviewed publications. Conversely, Sea Grant funding often supports academic researchers who have a primary goal to share work via peer-reviewed publications. At the same time, a substantial portion of Sea Grant work may end up in the gray literature (i.e., technical reports, conference proceedings, or extension publications), where findings may influence day-to-day decisions of managers without becoming part of the broader peer-reviewed scientific literature.

With the important caveat that cost efficiency is not the sole measure of research funding success and that it should be considered alongside other, program-specific measures of success and productivity, our analyses suggest that Sea Grant research yielded substantially more publications and citations per dollar of funding compared to overall NOAA research funding. Furthermore, our cost-efficiency metrics likely underestimate Sea Grant’s efficiency, as most programs direct closer to 30% of their budgets to research funding rather than the 40% we assumed. The notably higher efficiency from Sea Grant funding is interesting and bears further study. What types of funding models tend to produce output most efficiently? How does it vary by output measure (e.g., number of publications, citations, or other measures of impact beyond publication metrics) and by discipline? Further exploring these sorts of questions could help policymakers and agencies better understand how to structure their research funding programs to maximize different types of impact.

In all, our case study suggests that the Sea Grant framework of a national office providing funding to individual programs while allowing them the flexibility to focus on place-based, usable science has allowed the programs to set priorities based on issues of local need in contrast to the more common, centralized emphases (Potì and Reale, 2007). Notably, this decentralized funding model does not appear to have precluded the research’s making a substantial contribution to the scientific literature. Sea Grant-funded research has been broadly published in both high-impact and disciplinary journals, has been widely cited, and has funded scientists across many fields and career stages while being more cost-effective on a per-publication basis than other NOAA research funding. There are many models for funding scientific research, each of which has different strengths, weaknesses, and end goals. Based on our findings, Sea Grant research funding is a story of the positive power of leverage: leveraging local expertise and federal funds to address theoretical and applied research questions in service of the public good.

Acknowledgments

This work was funded by Illinois-Indiana Sea Grant, grant no. NA22OAR4170100. The authors declare no conflict of interest in this research. The lead author would like to thank E. Bo for assistance in manuscript development. Comments from three anonymous reviewers improved the manuscript and we acknowledge and appreciate their help.